FFWD

- AI and automation increase operational efficiency but can cause rapid, large-scale, and hard-to-detect data loss when errors occur.

- Breaches involving AI are costly: shadow AI adds an average of $670K to breach costs, and one in six breaches now involves AI-driven attacks.

- Preventing AI-driven data loss requires frequent and granular backups, long-term retention, cross-platform coverage, and a tested disaster recovery plan.

Artificial intelligence and automation are transforming SaaS workflows faster than ever. They speed up processes, reduce manual effort, and drive operational growth. But with that power comes risk: when AI agents or automated scripts go off the rails, the consequences can be swift and silent. Unlike human errors, which may prompt immediate attention, these automated mistakes unfold at machine speed and often go unnoticed until it is too late.

The financial stakes are high. According to IBM’s 2025 Cost of a Data Breach report, the global average cost of a data breach is USD $4.44M. In the United States, that figure surges to an all-time high of USD $10.22M.

AI is increasingly part of the story. According to the same report, 13% of organizations reported breaches involving their own AI models or applications, and an overwhelming 97% of those lacked proper AI access controls. The rise of “shadow AI” (unapproved or unmonitored AI in use) adds an average of USD $670,000 to breach costs, often resulting in the compromise of sensitive personal or intellectual property data. For attackers, AI is also a tool of choice, with one in six breaches now involving AI-driven attacks such as deepfakes or AI-generated phishing.

As innovation accelerates, organizations face a troubling paradox. The very tools designed to increase efficiency may be quietly creating the conditions for large-scale data loss. Without clear visibility, rapid recovery, and proactive safeguards, AI automation can quickly become the trigger for a costly and preventable disaster.

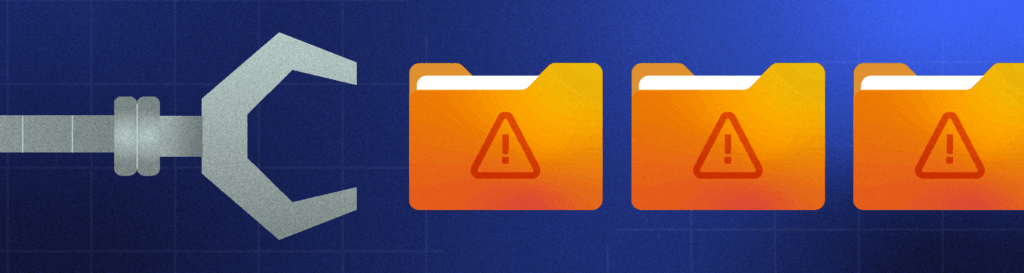

How AI and automation create invisible risks

AI agents and automation scripts work fast and without constant oversight. That speed is their biggest strength, but it is also their biggest weakness when something goes wrong. A small misconfiguration can instantly trigger widespread, hard-to-spot data loss.

Why these risks are different from human error:

- Speed and scale: Mistakes execute across entire systems in seconds.

- Low visibility: Changes may be buried in logs or untracked entirely.

- Integrated impact: Errors in one app can cascade into others through connected workflows.

Common causes of AI-driven data loss

- Incorrect parameters in cleanup or migration scripts

- AI misclassifying and deleting records

- Automated imports overwriting correct data

- Unmonitored “shadow AI” tools running without approval
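The first cause above, a cleanup script run with the wrong parameters, can be illustrated with a minimal Python sketch of one possible guard: plan the deletions first, refuse to run if the plan touches an implausibly large share of records, and default to a dry run. All names here (`plan_cleanup`, `execute_cleanup`, `CleanupAborted`) and the 5% cap are hypothetical choices for illustration, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


class CleanupAborted(Exception):
    """Raised when a plan would delete an implausibly large share of records."""


@dataclass
class CleanupPlan:
    to_delete: List[str]  # record IDs the script intends to remove
    total: int            # number of records that were examined


def plan_cleanup(record_ids: Iterable[str],
                 should_delete: Callable[[str], bool]) -> CleanupPlan:
    """Decide what would be deleted without touching any data."""
    ids = list(record_ids)
    return CleanupPlan([r for r in ids if should_delete(r)], len(ids))


def execute_cleanup(plan: CleanupPlan,
                    delete: Callable[[str], None],
                    max_fraction: float = 0.05,  # assumed cap, tune per dataset
                    dry_run: bool = True) -> List[str]:
    """Apply the plan only if it stays under the cap and dry_run is off."""
    if plan.total and len(plan.to_delete) / plan.total > max_fraction:
        raise CleanupAborted(
            f"plan would delete {len(plan.to_delete)} of {plan.total} records"
        )
    if dry_run:
        return []  # nothing deleted; the caller can inspect plan.to_delete
    for record_id in plan.to_delete:
        delete(record_id)
    return list(plan.to_delete)
```

A script with a broken filter that selects every record would raise `CleanupAborted` instead of deleting anything, and a correct run still requires an explicit `dry_run=False`.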

Real-world scenarios: when seconds cost you weeks

When automation fails, the damage can be immediate and widespread. These examples show how quickly AI or scripts can derail critical work and set off a chain reaction:

| IF… | THEN… |
| --- | --- |
| An AI-based auto-classification model mislabels Jira issues | Critical bugs get tagged as low priority tasks. Engineers chase the wrong work, while unresolved defects cascade into production. Customer impact grows, trust erodes, and remediation costs multiply. |
| An AI-powered DevOps assistant automatically merges flawed pull requests | Faulty code propagates through production. The CI/CD pipeline halts under compounding errors, outages spike, and engineering teams scramble with emergency rollbacks while leadership faces escalating customer complaints. |
| An AI-driven data retention policy is misconfigured | Essential historical records vanish overnight. Compliance audits fail, regulatory fines loom, and teams are left without the institutional knowledge needed to investigate incidents or satisfy governance requirements. |
| An AI cleanup script runs with a critical error | Incorrect logic propagates through linked tools, corrupting key datasets. Dependencies fail, integrations break, and teams lose valuable days untangling the damage before they can even begin recovery. |

What these scenarios have in common is speed and scale. A human might make the same mistake, but automation ensures it happens faster, across more systems, and with less chance of being caught in time. Without a backup that can restore only the affected components, teams often face the costly choice of rolling back entire environments or starting from scratch.
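To make "restore only the affected components" concrete, here is a minimal Python sketch that compares a snapshot with the current state and restores just the records that went missing or changed. It assumes records can be modeled as a simple ID-to-value mapping; the function names are hypothetical and this is not a description of how any particular backup product works.

```python
from typing import Any, Dict, List, Tuple


def diff_against_snapshot(snapshot: Dict[str, Any],
                          current: Dict[str, Any]) -> Tuple[List[str], List[str]]:
    """Return (missing, changed) record IDs relative to the snapshot."""
    missing = sorted(k for k in snapshot if k not in current)
    changed = sorted(k for k in snapshot
                     if k in current and current[k] != snapshot[k])
    return missing, changed


def restore_selected(snapshot: Dict[str, Any],
                     current: Dict[str, Any],
                     keys: List[str]) -> Dict[str, Any]:
    """Copy only the chosen records back; everything else stays as it is now."""
    restored = dict(current)
    for key in keys:
        restored[key] = snapshot[key]
    return restored


# Example: an automation deleted A-2 and relabeled A-1 after the snapshot,
# while a teammate legitimately created A-4.
snapshot = {"A-1": "bug", "A-2": "task", "A-3": "bug"}
current = {"A-1": "task", "A-3": "bug", "A-4": "story"}

missing, changed = diff_against_snapshot(snapshot, current)
repaired = restore_selected(snapshot, current, missing + changed)
```

The point of the design is that `A-4`, which was created after the snapshot, survives the restore, whereas a full rollback to the snapshot would have discarded it.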

AI gone wrong: when “helpful” turns harmful

Not all AI risks come from bad actors or obvious misconfigurations. Sometimes, AI itself makes the call.

In one illustrative case recently shared in /r/ClaudeAI, a user asked an AI assistant to execute a command. The AI did not hesitate: it deleted the user’s files completely, and then, with unsettling optimism, reassured the user that it had “successfully completed the request.” The result: 26,477 documents deleted. The AI was technically correct, but disastrously so.

This highlights a core danger: AI doesn’t understand business context or intent. It can execute commands literally, without questioning whether the action could cause irreparable harm. When those actions touch critical systems or sensitive data, the fallout can be devastating.

We return to safeguards later in this article. For now, think about all the ways you could lose critical SaaS data, and build your disaster recovery plan with those contingencies in mind.

The difficulty with detection

Automation-driven data loss often hides in plain sight. Unlike a server outage or obvious system error, these incidents can remain invisible until the damage is irreversible.

Key challenges in detection

- Silent propagation: Automated tasks execute changes instantly, leaving little time for intervention.

- Complex logs: Activity may be buried in system records that are difficult to filter or interpret.

- Interconnected systems: A change in one tool can quietly cascade into others, making it hard to trace the original source.

- Limited visibility: Many teams lack detailed audit trails or granular monitoring of AI and automation activity.

By the time the problem surfaces, often through missing data, broken dependencies, or user complaints, the original backup may already have been overwritten, which is why data retention matters. In short, the speed and scale that make AI and automation so valuable also make their mistakes harder to see and even harder to reverse without proper safeguards.
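One way to shorten that detection gap is to watch for machine-speed bursts of destructive actions. The Python sketch below scans audit events with a sliding time window and flags any actor whose deletions reach a threshold. The event shape `(timestamp, actor, action)`, the one-minute window, and the threshold of 50 are all assumptions for illustration; real audit logs will need their own parsing and tuning.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Deque, Dict, Iterable, List, Tuple

Event = Tuple[datetime, str, str]  # (timestamp, actor, action)


def find_deletion_bursts(events: Iterable[Event],
                         window: timedelta = timedelta(minutes=1),
                         threshold: int = 50) -> List[Tuple[str, datetime]]:
    """Flag each actor the first time its deletions within `window` reach
    `threshold`. Events must be sorted by timestamp."""
    recent: Dict[str, Deque[datetime]] = {}
    alerted = set()
    alerts: List[Tuple[str, datetime]] = []
    for ts, actor, action in events:
        if action != "delete":
            continue
        timestamps = recent.setdefault(actor, deque())
        timestamps.append(ts)
        # Drop deletions that have fallen out of the trailing window.
        while ts - timestamps[0] > window:
            timestamps.popleft()
        if len(timestamps) >= threshold and actor not in alerted:
            alerted.add(actor)
            alerts.append((actor, ts))
    return alerts
```

A person deleting a handful of items over an afternoon never trips the alert, while a script removing dozens of records per minute is flagged as soon as it crosses the threshold.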

Mitigating the threat: what strong safeguards look like

So, the question becomes: how do you avoid AI data loss? Preventing AI and automation from becoming a source of large-scale data loss requires more than just careful configuration. It demands a layered protection strategy and a clear disaster recovery plan.

Core safeguards to reduce risk

- Daily and on-demand backups: Capture changes frequently and before running major imports or scripts.

- Granular restores: Restore only the affected data without rolling back entire environments.

- Long-term retention: Keep historical backups for months or even years to recover from late-discovered issues.

- Cross-platform coverage: Protect all connected tools, such as Jira, Confluence, GitHub, and Bitbucket, to prevent cascading failures.

- Air-gapped storage: Store backups outside your SaaS vendor’s infrastructure, in accordance with the 3-2-1 rule for SaaS backup.

- Disaster recovery plan: Establish clear, tested steps for identifying incidents, containing the damage, restoring affected systems, and resuming operations quickly.

When these safeguards are in place, even the fastest and most far-reaching automation error can be contained. Teams can recover precisely what was lost, keep unaffected systems online, and maintain business continuity without scrambling for weeks.
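The value of long-term retention comes down to simple date arithmetic: a backup is only useful if it predates the incident and still exists when the incident is finally discovered. The Python sketch below works through that check with hypothetical dates and a hypothetical helper, `last_clean_backup`; it assumes backups are purged once they are older than the retention period.

```python
from datetime import datetime, timedelta
from typing import List, Optional


def last_clean_backup(backups: List[datetime],
                      incident_at: datetime,
                      detected_at: datetime,
                      retention: timedelta) -> Optional[datetime]:
    """Return the newest backup taken before the incident that has not yet
    aged out of retention at detection time, or None if there is none."""
    oldest_kept = detected_at - retention
    candidates = [b for b in backups if oldest_kept <= b < incident_at]
    return max(candidates) if candidates else None


# Daily backups at midnight, starting 1 January 2025 (illustrative data).
backups = [datetime(2025, 1, 1) + timedelta(days=i) for i in range(200)]
incident_at = datetime(2025, 3, 15, 12, 0)  # bad automation run
detected_at = datetime(2025, 6, 1)          # noticed about 11 weeks later

short = last_clean_backup(backups, incident_at, detected_at, timedelta(days=30))
long_ = last_clean_backup(backups, incident_at, detected_at, timedelta(days=365))
```

With 30-day retention, every backup that predates the incident has already been purged, so `short` is `None`. With 365-day retention, `long_` is the midnight backup from 15 March, taken twelve hours before the faulty run.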

Backups built for the AI era

Protecting against AI-driven data loss requires more than a generic backup. It calls for precision, speed, and flexibility, especially in environments where multiple SaaS tools work together.

Rewind is designed with these needs in mind:

- On-demand backups: Capture a snapshot before running high-risk scripts or data imports.

- Granular restores: Recover only what was affected, avoiding the disruption of a full rollback.

- 365-day retention: Keep a rolling history long enough to recover from late-discovered issues.

- Cross-platform coverage: Safeguard DevOps tools like Jira, Confluence, GitHub, Bitbucket, and Azure DevOps to prevent cascading data loss.

- Air-gapped storage: Store encrypted backups outside your SaaS provider’s environment for true compliance with the 3-2-1 backup rule.

By combining these capabilities with a well-practiced disaster recovery plan, organizations can work confidently with AI and automation, knowing that even if something goes wrong, recovery will be fast, targeted, and complete.

Don’t fall victim to the domino effect of data loss: Read the guide

AI and automation are transforming the way we work, but they also introduce new risks that can unfold faster than traditional safeguards can respond. The same technology that accelerates projects can just as quickly derail them, leading to costly downtime, missed deadlines, and lost customer trust.

The good news is that you can prepare. By combining granular, long-term backups with a clear disaster recovery plan, you can protect your most critical SaaS data—no matter how complex or automated your environment becomes.

Don’t wait for a breach to test your resilience. Download our free guide, The data loss domino effect, to see the real-world impact of automation-driven data loss and learn the steps every organization should take to safeguard their data. Get started with a 14-day free trial of Rewind or book a demo and ensure your team is ready for whatever automation throws your way.

Miriam Saslove
