Version control systems are an integral part of modern software development workflows. Your Git repositories record the complete history of changes made to your code, allowing you to compare and revert to older versions.

As a distributed system, Git also makes it easy to synchronize repositories between different machines. Running git clone is the first step when you want to contribute code to projects stored on platforms such as GitHub, GitLab, and Bitbucket. Cloning a repository produces an independent copy that includes all previous file revisions, ready for you to iterate upon.

While git clone is an easy way to copy repositories, it shouldn’t be relied upon as a backup solution. Cloned repositories aren’t designed for this purpose. They can be incomplete, are difficult to store, and pose problems during disaster recovery. In this article, you’ll learn why you shouldn’t use git clone for backups and explore some best practice backup steps to take instead.

Why relying on git clone as a backup strategy is a bad idea

Cloning a repository gives you a copy of its content, including commits and branches. Your local Git client downloads the remote repository, then checks out its main branch into a new directory on your machine.

Cloning is usually a one-time operation, performed when a developer begins working on a project. After the initial clone, you can synchronize changes made by other collaborators using the git pull and git fetch commands:

# Clone the repository

$ git clone ssh://example@example.com/my-project/api.git

...

# Enter the repository's directory

$ cd api

# Get new changes from the server

$ git pull

A clone gives you the repository data you need to begin developing on a new machine. However, git clone is not designed to provide a complete backup of all the data in the repository. It’s an operation for developers to use as part of their setup workflow.

Access to a clone ensures you can recover the latest state of your source, but it doesn’t guarantee you can restore the remote server environment such as GitHub, GitLab, or Bitbucket after a disaster.

Incomplete backups

Running git clone downloads the data within the remote repository you specify, but it won’t fetch all the metadata that defines the server’s view of that repository.

Cloned repositories contain the commits, trees, branches, tags, and file blobs that exist inside the repository. You can use this data to access code, move between current branches and tags, and inspect previous commits. But clones omit other kinds of data that exists on the server, such as remotes, notes, and tracking branches configured in the server’s version of the repository. This means the clone can’t be used as a direct replacement for the server’s repository after a disaster occurs.

Running git clone with the --mirror flag (git clone --mirror) captures more server metadata in your local clone, including the remotes and notes. Every ref present on the server will become part of your clone.

Even --mirror will omit important platform-specific data. Although Git is a decentralized system, most teams now rely on a central service such as GitHub, GitLab, or Bitbucket to store project management data alongside their repositories. Issues, pull requests, comments, and wikis are all treated as part of the project’s repository, but they’re stored separately by the platform. You’ll be unable to recover this data when your only backup strategy is git clone.

Potential for data loss

Using git clone as a backup strategy raises the possibility of data loss. Besides the obvious risks of crucial metadata being excluded, the git clone command can encounter difficulties when the repository’s being actively used.

New commits made while the clone’s running may not reach your local machine. There’s no built-in mechanism to lock a repository while a clone completes, which prevents you from asserting that clones are an accurate point-in-time copy. One way to mitigate this is to adjust your backup script to run git pull after the clone, but further changes could still be added during that pull.

Clones and pulls allow developers to retrieve the latest state of code, ready to iterate upon by making changes to them that will be merged later on. By contrast, backups should be complete replicas of the repository at the time they’re created.

File corruption

A Git repository consists of thousands of tiny files that represent its refs and objects. Such a large number of files creates a risk of corruption if the network drops during a clone or a file-system-level error occurs.

Cloning also requires technologies used by the repository to function on both the local and remote Git clients. For example, Git LFS is often used to store large binary data such as images and documents, but it can lead to corrupt repositories when a client’s unable to reach the LFS API to download files during a clone.

Security risks

Cloning repositories as part of a backup strategy can be a security risk. It expands the repositories’ potential audience because a new copy of the repository enters existence.

You have to open up access to the host Git server so the backup script can complete the clone. The machine that runs git clone must also be properly secured to prevent the repository’s content from being accidentally exposed.

To mitigate this risk, avoid backing up repositories using git clone. It’s safer to push the repository from the host server to your backup storage directly using a dedicated solution.

Best practices for backing up your code

Now that you’ve seen some of the issues with using git clone for backups, here’s how to address the problems with a more methodical approach.

Have a backup strategy

A deliberate backup strategy allows you to understand how your backups are made, what’s included, and the steps to take to restore them. Too many teams assume their repository clones will fulfill their backup requirements but haven’t assessed the drawbacks of the approach.

To devise a strategy, first identify which data needs to be included, where it will be stored, and how long you will retain it. You can then look for technical solutions to implement your strategy, such as regular pushes of your repository to a remote location or a scheduled platform-level backup using a tool such as Rewind.

Test your backups regularly

Backups are only useful if they work during a disaster. Unfortunately, 26% of backup strategies fail when they’re called upon for restoration.

For this reason, it’s critical that you regularly test your backups by rehearsing your disaster recovery procedures. Check that you can access your backups and restore them over the live versions of your repositories. Inspect the recovered content afterward to ensure your data has been correctly reinstated.

Resolve any coverage gaps that you identify by amending your backup strategy to include the additional data types you require. Afterward, repeat your restoration test to check you’ve now got a functioning backup of your repositories.

Store backups separately

Backups should be stored separately from the repositories that store your code. Keeping backups on the same server as your repository renders them useless in the event of hardware failure or a security breach such as ransomware, where data is destroyed. Instead, use a dedicated off-site storage solution to maximize redundancy.

In highly sensitive situations, it’s a good idea to replicate backups across multiple storage providers (such as Amazon S3 and your own server) in case one of them is unavailable during a disaster. This further reduces the risk of backups being unobtainable when they’re required.

Capture project metadata, not just your code

Finally, remember that modern-day repositories are more than just the code within them. Access to a cloned .git directory isn’t enough to put your GitHub, GitLab, or Bitbucket account back to the state it was in before an incident occurred.

Ensure you’re backing up your issues, comments, and activity history so your team’s plans and discussions aren’t lost after a disaster. Similarly, it’s important to include documentation, CI/CD and IaC configurations, and any other critical assets in your backups. Focus on backing up your entire development environment, not just code that can prove unusable if restored in isolation.

Conclusion

Source code is a software company’s most valuable asset. Consequently, it’s imperative that all code is subject to a robust backup regime that leaves no room for error.

Relying on git clone is an inadequate strategy. A standard clone omits potentially useful data such as remote tracking branches. Adding the --mirror flag is more comprehensive but still only captures your repository’s content, without critical platform-specific metadata.

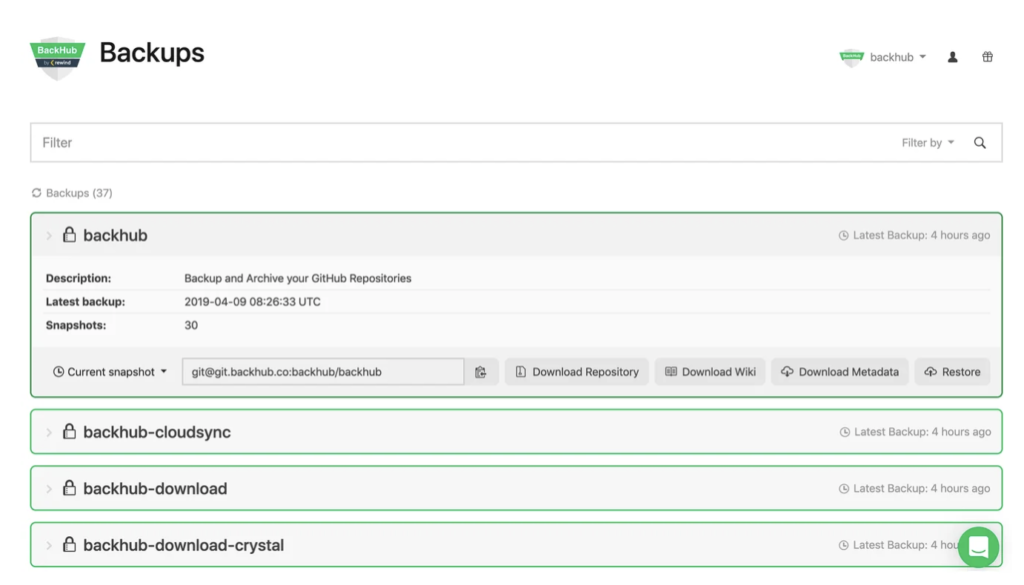

You can comprehensively back up your GitHub repositories using Rewind Backups, an automated backup platform. Rewind captures the contents of your repositories as well as issues, pull requests, and other GitHub data. You can store backups securely in cloud storage, then rapidly restore them to your GitHub account with Rewind’s self-service portal. Rewind also supports on-demand exports of Bitbucket repositories as well as backup and recovery solutions for popular SaaS platforms.

Protect your Git data without relying on flaky git clone scripts with a dedicated backup and recovery solution.