At Rewind, we use Sidekiq for almost all of our background processing jobs for our customer backups. It’s a super reliable, highly scalable job processing engine that gives us a lot of control over backup jobs and enables us to break large backups down into small units of work.

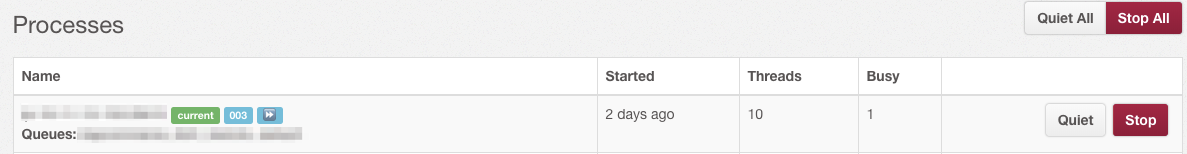

One feature of Sidekiq is the ability to quiet workers, which you generally want to do before any action that would restart the main Sidekiq process. Quieting a worker lets it finish any work in progress but prevents it from starting any new tasks. Because Sidekiq uses Redis as its data store, no work items are lost; they simply stay enqueued until a worker becomes available again. It looks something like this in the Sidekiq console:

In our case, we want to quiet the workers whenever we deploy new code to them, and we have a lot of them running on Elastic Beanstalk across many regions. We’d also like specific users to be able to quiet workers using tooling on their own machines or, in the future, from a chatbot.

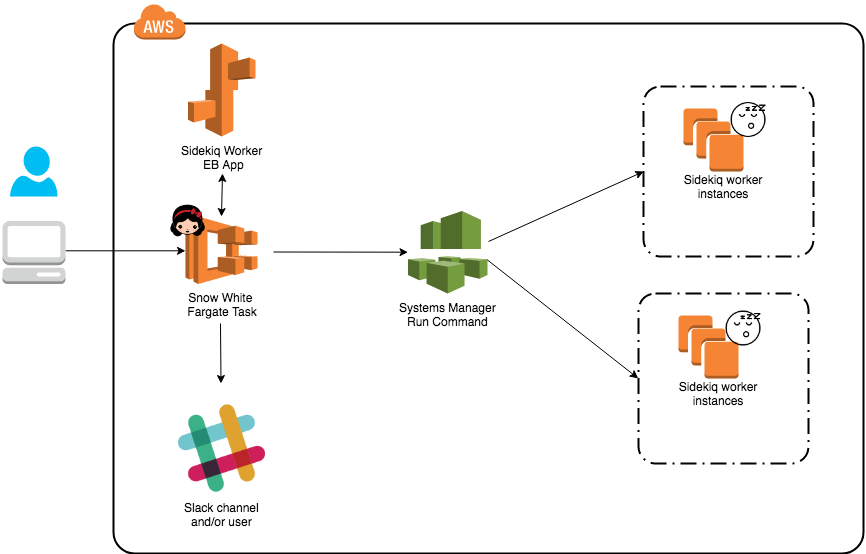

We came up with a solution using a Docker container running on AWS Fargate combined with AWS Systems Manager (SSM) Run Command. It works rather well and scales easily, since Fargate runs the container without any servers for us to provision or manage. Read on for the details of exactly how it works.

Quiet, Sidekiq

Image courtesy of www.clker.com

Sidekiq provides the web console to quiet workers, but it turns out there is a much easier way if you have access to the instance running the worker: signals. Sending a TSTP signal quiets the worker so it stops picking up new jobs, and sending a TERM signal makes the worker shut down.
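To make the signal approach concrete, here is a minimal Python sketch of sending those two signals to a Sidekiq process. It is not the snow-white code. The `find_sidekiq_pids` helper and its `pgrep -f "^sidekiq"` match are hypothetical. They assume Sidekiq has rewritten its process title to start with "sidekiq".

```python
import os
import signal
import subprocess
from typing import List

# The two signals described above (Sidekiq 5.x and later).
SIDEKIQ_SIGNALS = {
    "quiet": signal.SIGTSTP,  # stop fetching new jobs, finish the ones in flight
    "stop": signal.SIGTERM,   # shut down; in our setup Upstart restarts the process
}


def find_sidekiq_pids() -> List[int]:
    """Hypothetical helper: PIDs whose command line starts with "sidekiq"."""
    # pgrep exits non-zero with empty output when nothing matches.
    result = subprocess.run(["pgrep", "-f", "^sidekiq"], capture_output=True, text=True)
    return [int(pid) for pid in result.stdout.split()]


def signal_sidekiq(pid: int, action: str) -> None:
    """Send the signal for `action` ("quiet" or "stop") to one Sidekiq process."""
    os.kill(pid, SIDEKIQ_SIGNALS[action])
```

Quieting every Sidekiq process on a host would then be a loop over `find_sidekiq_pids()` calling `signal_sidekiq(pid, "quiet")`.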

Armed with these two signals (and the fact that our Sidekiq workers are started by Upstart and automatically restart if they exit), we just had to find a way to send these signals to the EC2 instances started by Elastic Beanstalk and then wait until the workers had completed all their running tasks.
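Waiting for the workers to finish is the harder half. One way to check from the instance itself is to read Sidekiq's process title. As far as we know, in Sidekiq 5.x/6.x it has the form `sidekiq 5.2.7 myapp [3 of 10 busy] stopping` and is refreshed periodically. The Python sketch below relies on that assumption. The `ps -eo args=` call and the polling intervals are illustrative, not necessarily what snow-white's shell script does.

```python
import re
import subprocess
import time
from typing import Callable, List, Optional

# Assumed process-title format, e.g. "sidekiq 5.2.7 myapp [3 of 10 busy] stopping".
BUSY_RE = re.compile(r"\[(\d+) of (\d+) busy\]")


def busy_count(proc_title: str) -> Optional[int]:
    """Return the number of busy threads in a Sidekiq process title, or None."""
    match = BUSY_RE.search(proc_title)
    return int(match.group(1)) if match else None


def sidekiq_titles() -> List[str]:
    """Process titles of every running process whose command line starts with "sidekiq"."""
    out = subprocess.run(["ps", "-eo", "args="], capture_output=True, text=True).stdout
    return [line for line in out.splitlines() if line.startswith("sidekiq")]


def wait_until_idle(get_titles: Callable[[], List[str]] = sidekiq_titles,
                    poll_seconds: float = 10.0,
                    timeout_seconds: float = 7200.0) -> bool:
    """Poll until every Sidekiq process reports 0 busy threads.

    Returns True once idle (no Sidekiq processes at all also counts as idle),
    or False if the timeout expires first.
    """
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        counts = [busy_count(title) for title in get_titles()]
        if all(count == 0 for count in counts if count is not None):
            return True
        time.sleep(poll_seconds)
    return False
```

The timeout matters because, as described below, draining in-flight work can take a long time.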

The Catches

There are a couple of catches that shaped the eventual solution:

1) When Sidekiq workers are quieted, they keep processing any work in progress before they actually go quiet. Some of these work items are very quick, but some can run for a while, or there can be many work items in progress at once. This means that whatever monitors whether the worker is really quiet may need to run for a long time. This requirement is why the main trigger is a Fargate task rather than a Lambda function: a Lambda can run for at most 15 minutes, and we want to guarantee we never kill active workers.

2) How do we run commands on the EC2 instances securely? I’d had some experience with SSM Run Command in the past and, as luck would have it, the SSM agent is now installed by default in Elastic Beanstalk environments. SSM Run Command lets you run a command on an EC2 instance, and access to it can be controlled with standard IAM policies. Further, more complex SSM commands are now possible thanks to the aws:downloadContent action, which can download command scripts from GitHub.

3) The solution can notify users in Slack when workers have finished all their work and are truly quiet. But how do we find the Slack user to notify? IAM recently added the ability to tag IAM users, so we store each user’s Slack user ID as a tag on their IAM user.
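For the Slack lookup, here is a minimal sketch that assumes a hypothetical tag key, `slack_user_id`. `list_user_tags` is the real boto3 IAM call. Everything else is illustrative, and the IAM client is passed in so the logic can be tested without AWS.

```python
from typing import Optional

SLACK_TAG_KEY = "slack_user_id"  # hypothetical tag key; use whatever your team chose


def iam_user_name_from_arn(arn: str) -> str:
    """'arn:aws:iam::123456789012:user/ops/dave' -> 'dave' (drops any IAM path)."""
    return arn.rsplit("/", 1)[-1]


def slack_user_id(iam_client, user_name: str, tag_key: str = SLACK_TAG_KEY) -> Optional[str]:
    """Return the Slack user ID stored as a tag on an IAM user, or None if untagged."""
    tags = iam_client.list_user_tags(UserName=user_name)["Tags"]
    for tag in tags:
        if tag["Key"] == tag_key:
            return tag["Value"]
    return None


def slack_mention(user_id: str) -> str:
    """Slack renders <@USERID> in a message as a mention of that user."""
    return f"<@{user_id}>"
```

With boto3, `iam_client` would be `boto3.client("iam")`. The invoking user's ARN is available from `boto3.client("sts").get_caller_identity()["Arn"]`.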

Snow White

Image courtesy of www.clipartmax.com

Enter Snow White. Snow White ate the apple and slept for a while. Well, the Sidekiq workers get a visit from Snow White and have a sleep (go quiet). So what does the Snow White solution look like and what are the main components?

The Fargate task is a containerized Python/boto3 script that does the following:

- Find the list of instances we need to act on. The script accepts a pattern for the Elastic Beanstalk environments to look at (e.g. all of our worker environments in a given EB application have “workers” in their name), so there can be many instances across many environments in a given EB application.

- Invoke the appropriate SSM Run Command (quiet or wake) on all of those instances (more on the commands below).

- Poll SSM for the command status until it completes (either successfully or with an error).

- Send a notification message to Slack: to a channel, to the invoking user, or both.
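The first two steps could look roughly like the Python sketch below. It is a hypothetical illustration, not the snow-white source:

- The clients are passed in, for example `boto3.client("elasticbeanstalk", region_name=...)` and `boto3.client("ssm", region_name=...)`, one pair per region.
- The document name used in any call is up to you.
- The `executionTimeout` parameter matches the one defined in this post's Run Command document.
- `send_command` accepts at most 50 instance IDs per call, so the list is sent in batches.

```python
import re
from typing import List

# SendCommand accepts at most 50 instance IDs per call.
MAX_TARGETS_PER_SEND = 50


def matching_instances(eb_client, application: str, pattern: str) -> List[str]:
    """EC2 instance IDs of every EB environment in `application` whose name matches `pattern`."""
    environments = eb_client.describe_environments(
        ApplicationName=application, IncludeDeleted=False
    )["Environments"]
    instance_ids: List[str] = []
    for env in environments:
        if not re.search(pattern, env["EnvironmentName"]):
            continue
        resources = eb_client.describe_environment_resources(EnvironmentId=env["EnvironmentId"])
        instance_ids.extend(i["Id"] for i in resources["EnvironmentResources"]["Instances"])
    return instance_ids


def send_to_instances(ssm_client, document_name: str, instance_ids: List[str],
                      timeout_seconds: int = 7200) -> List[str]:
    """Run an SSM document on the instances in batches; return one CommandId per batch."""
    command_ids = []
    for start in range(0, len(instance_ids), MAX_TARGETS_PER_SEND):
        response = ssm_client.send_command(
            InstanceIds=instance_ids[start:start + MAX_TARGETS_PER_SEND],
            DocumentName=document_name,
            # Feeds timeoutSeconds through the document's executionTimeout parameter.
            Parameters={"executionTimeout": [str(timeout_seconds)]},
        )
        command_ids.append(response["Command"]["CommandId"])
    return command_ids
```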

The SSM Run Command documents are quite small because they use a newer Run Command feature that lets the bulk of the command live externally. In our case, the scripts live in the same GitHub repo as the main container. This lets us write a Run Command document like this:

```yaml
description: "Quiet Sidekiq Workers"
schemaVersion: "2.2"
parameters:
  executionTimeout:
    default: "7200"
    description: "(Optional) The time in seconds for a command to be completed before it is considered to have failed. Default is 7200 (2 hours). Maximum is 172800 (48 hours)."
    type: "String"
    allowedPattern: "([1-9][0-9]{0,4})|(1[0-6][0-9]{4})|(17[0-1][0-9]{3})|(172[0-7][0-9]{2})|(172800)"
mainSteps:
  - inputs:
      sourceInfo: "{ \"owner\":\"rewindio\", \"repository\": \"snow-white\", \"path\" : \"ssm/quiet_sidekiq_workers.sh\" }"
      sourceType: "GitHub"
      destinationPath: "/tmp"
    name: "downloadScripts"
    action: "aws:downloadContent"
  - inputs:
      workingDirectory: "/tmp"
      timeoutSeconds: "{{ executionTimeout }}"
      runCommand:
        - "ls -l"
        - "chmod a+x /tmp/quiet_sidekiq_workers.sh"
        - "/tmp/quiet_sidekiq_workers.sh"
    name: "quiet_workers"
    action: "aws:runShellScript"
    precondition:
      StringEquals:
        - "platformType"
        - "Linux"
```

A couple of things are worth noting here because they tripped me up while developing this solution:

1) If you use multiple mainSteps, the get_command_invocation boto3 call (or CLI command) fails with an error unless you also pass the name of a single step as PluginName. To get the status of the whole command execution, you MUST use list_command_invocations with the Details parameter set to True.

2) Even though I’d written the command document in YAML, the sourceInfo attribute can only be specified as a JSON string.

3) To increase the execution timeout from its default of 1 hour, you must set timeoutSeconds from a document parameter (here, executionTimeout). If you hard-code a value under timeoutSeconds, it is ignored. In the words of OMC – How Bizarre!
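Following tip 1, the polling step might be sketched like this. It is an illustration under assumptions, not the snow-white code. The set of in-flight statuses is our reading of the SSM API, and the intervals are arbitrary. Only each invocation's top-level Status is used. With Details set to True, the per-step results are also available in each invocation's `CommandPlugins` list.

```python
import time
from typing import Dict

# Invocation statuses that mean the command is still running on that instance.
IN_FLIGHT = {"Pending", "InProgress", "Delayed", "Cancelling"}


def invocation_statuses(ssm_client, command_id: str) -> Dict[str, str]:
    """Map instance ID -> status for every invocation of one command (all pages)."""
    statuses: Dict[str, str] = {}
    kwargs = {"CommandId": command_id, "Details": True}
    while True:
        page = ssm_client.list_command_invocations(**kwargs)
        for invocation in page["CommandInvocations"]:
            statuses[invocation["InstanceId"]] = invocation["Status"]
        if not page.get("NextToken"):
            return statuses
        kwargs["NextToken"] = page["NextToken"]


def wait_for_command(ssm_client, command_id: str, poll_seconds: float = 30.0,
                     timeout_seconds: float = 7200.0) -> Dict[str, str]:
    """Poll until no invocation is in flight, or until the timeout expires."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        statuses = invocation_statuses(ssm_client, command_id)
        # Invocations may not be listed yet right after send_command, so an
        # empty result counts as "not finished".
        finished = bool(statuses) and not (set(statuses.values()) & IN_FLIGHT)
        if finished or time.monotonic() >= deadline:
            return statuses
        time.sleep(poll_seconds)
```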

The final piece of the puzzle is how this all gets invoked. All that’s needed is to start the Fargate task with some arguments. To this end, the repo includes a small helper script (snow-white.sh) that uses the AWS CLI to start the task, but the task can be started by any method (Lambda, boto3, etc.). This means the solution could easily be extended into a chatbot by adding an API Gateway endpoint backed by a Lambda function that starts the Fargate task.
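Starting the task from Python instead of the helper script could look like the sketch below, which uses boto3's `ecs.run_task`. The following are all hypothetical:

- the cluster, task definition and container name
- the network settings
- the arguments themselves

Check the repo for the arguments snow-white actually accepts.

```python
from typing import List


def start_snow_white(ecs_client, cluster: str, task_definition: str, container: str,
                     subnets: List[str], security_groups: List[str], args: List[str]) -> str:
    """Start one Fargate task with the given arguments and return its task ARN."""
    response = ecs_client.run_task(
        cluster=cluster,
        taskDefinition=task_definition,
        launchType="FARGATE",
        count=1,
        networkConfiguration={
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                # Needed to reach AWS APIs, GitHub and Slack from a public subnet
                # without a NAT gateway; use "DISABLED" if you have one.
                "assignPublicIp": "ENABLED",
            }
        },
        # Replace the container definition's default command with our arguments.
        overrides={"containerOverrides": [{"name": container, "command": args}]},
    )
    if response["failures"]:
        raise RuntimeError(f"run_task failed: {response['failures']}")
    return response["tasks"][0]["taskArn"]
```

Overriding `command` replaces the image's default CMD. This sketch therefore assumes the script is the container's ENTRYPOINT and receives the arguments as its command. A chatbot Lambda handler behind API Gateway could call the same function with `boto3.client("ecs")`.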

How is this all packaged?

Everything running in AWS is packaged in a CloudFormation template. CloudFormation is perfect for this solution because it lets us package the Fargate task definition, its IAM roles and all of the SSM documents in one neat template. You can find it in the GitHub repo.

The full source for this solution (including instructions how to test this locally) is available on GitHub: https://github.com/rewindio/snow-white

For more information about Rewind, please head on over to rewind.com. Or, learn more about how to backup Shopify, backup BigCommerce, or backup QuickBooks Online.

We’re hiring. Come work with us!

We’re always looking for passionate and talented engineers to join our growing team.

Dave North