At Rewind, nothing is more important to us than the ability for our customers to quickly recover from a data disaster. Fast, accurate and reliable application search is what allows our customers to be able to find items within their vault and recover their data.

Since the beginning, we have offered the ability for our customers to search their vault, but what powers that search has changed substantially over the years. Initially we picked a few attributes from the items we backed up and stored them in a SQL database. The application would then query this database using a wildcard query, matching on whatever it could. This setup worked reasonably well in the early days, but we quickly outgrew it as we continued to scale. The database was becoming overwhelmed by the queries, resulting in a slow experience for the customer. Worse yet, the database being under such stress caused our backups to also slow down. We continued to scale up our database to combat these issues, but we knew that we needed a better solution for the long run.

Enter Elasticsearch

We needed something that would not only return results fast, but also results that are truly relevant to the customer’s query. Additionally, customers wanted to search by more than just the handful of attributes we stored, so the solution had to be able to handle larger payloads.

With those requirements in mind, we determined Elasticsearch was the solution for us. Elasticsearch powers some of the best application search out there, and its massively scalable architecture, along with its ability to improve result relevancy via document scoring, ticked all our boxes. The question was, how do we use this thing?

Diving in head first

We began by getting familiar with the basic concepts of Elasticsearch; things like mappings, indices and shards. After thinking we had wrapped our heads around the basics, we began implementing a solution.

Index Structure:

[account_id]/[item_type]/[item_id]

Our initial solution was to have an index for each and every account and separate each of the item types by making use of the _type field that was available in Elasticsearch 5.X. The documents stored in Elasticsearch were similar to the JSON that would be backed up from a platform, with a few fields that wouldn’t be useful for search omitted. Documents were fed into Elasticsearch directly from our backup engine and we created a basic query that our search function would utilize. We were off to the races! Or so we thought…

Do you smell smoke?

We launched this solution to power the search for our new QuickBooks Online vault. The solution seemed to hold up well, and during that time we decided to bring it to all of our other platforms. Our Shopify vault contained far more data and more users searching than the other platforms at the time – so once we flipped the switch, the fires began.

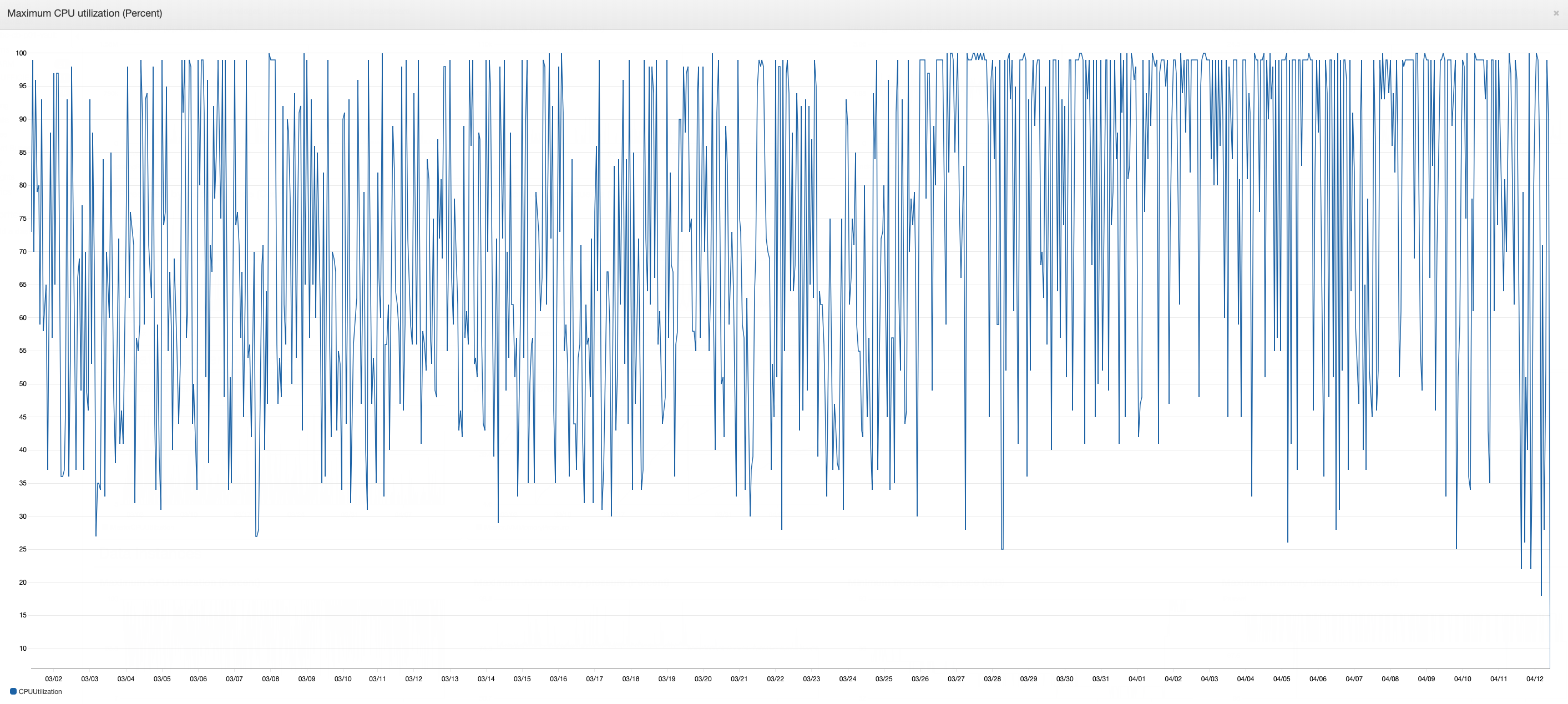

Almost instantly our cluster’s CPU usage went from hovering in the low 20% to constantly hitting 100%. Memory pressure went through the roof, and the cluster status constantly was going into the red.

What’s going on?

Every time a customer installed our application, the cluster would become unstable. We knew that when a new customer had their first backup performed, we were creating a new index for them. This action of creating an index caused our cluster to become unstable for brief periods of time, and therefore unable to handle new indexing or search requests. This instability cascaded down to our backup engine, causing it to slow down significantly because it was attempting to save data into our cluster. This brought back a lot of deja vu from our SQL database searching days.

Why would the simple act of creating a new index cause such issues to our cluster? Elasticsearch powers companies that run at a much larger scale than ours, so how could it be that our cluster was crumbling before our eyes? We needed a fix and fast, so naturally we threw more money at it by massively scaling up the cluster to see if that would solve our problems; it did, for a little while at least…

As time went on, and money went out the window, we realized that what we built once again did not scale. We needed to take a step back and get a more in-depth understanding about the technology before we could find out how to fix it.

Our takeaways

Our deep dive into Elasticsearch left us with 4 big takeaways that we made sure to work into our redesigned search architecture:

Indices aren’t free

We shot ourselves in the foot right from the start with our index structure, because every time a customer installed, a new index was being created. These indices are made up of shards, by default 5 primary shards with 1 replica of each primary shard; that’s a total of 10 shards. If you had a cluster with 100,000 indices, you’d be looking at a million shards. So what? Well shards take up memory; this means that the more shards you have, the more RAM your node will need. Each of our indices contained a relatively small amount of data, but the sheer quantity of indices we had meant that our boxes were left with little working memory to actually perform ingest and search operations.

Additionally, every time an index was created, the cluster performed a rebalancing of shards. This operation looks at the weight of the shards across the entire cluster and moves shards across nodes when one or more nodes are found to be holding more data than others. This is an expensive operation that happened each time a customer installed our app.

Finally, Elasticsearch keeps mapping information for each index in what is known as the cluster state. This state is also kept in memory and each time it’s updated it must be done by a single thread, for integrity reasons, before it’s propagated to the rest of the cluster. With the amount of indices we had, this cluster state was huge and constantly being updated each time a new index was added, which killed our cluster’s performance.

Buffer your writes

Our initial design had our system writing directly to our Elasticsearch indexing endpoint. While this got us off the ground quickly, it inevitably caused headaches down the road. Our backup engine would have to slow itself down in order to be able to make requests to index the data in Elasticsearch and often wouldn’t even be successful in doing that! If the cluster was in an unhealthy state, we wouldn’t be able to index the data. This meant that while we did backup an item for a customer, they would not be able to search for it. We had workarounds in place that would reconcile the data stored in our vault with what is in Elasticsearch in order to deliver on the experience the customer deserves until we could figure out how to fix our architecture.

Adding a queue system to buffer our writes to Elasticsearch meant that our backup engine could hand off the responsibility of writing the document to the buffer and not be slowed down when the cluster came under load. If the cluster were to enter an unhealthy state, this buffer can retry the call until the data makes its way in, with appropriate backoff logic in place.

Control your mappings

In Elasticsearch, the schema is defined by the mappings.The mappings describe the data type of each attribute in your JSON object, and how they should be indexed for searching. Out of the box, Elasticsearch makes use of “Dynamic Mappings” which automatically guesses the data type of the attribute. This makes it super simple to get started and allows you to not have to worry about updating your mapping file each time you add a new attribute to index.

Mappings however are not free. If you constantly add new unique attributes into Elasticsearch, your mappings file will continue to grow. As your mappings grow, searching and indexing performance will begin to slow. When you blindly store JSON objects in Elasticsearch with dynamic mapping enabled, you can easily run into a “mapping explosion” where your mapping file becomes too big for the cluster to handle. Controlling what data you send to Elasticsearch and having a static mapping file both can help avoid such an explosion.

Plan to reindex

Some questions may start circling your mind when you first get started with Elasticsearch, such as:

- How many shards should I have per index?

- How big should my indices be?

- What type of analyzers should I use to index my data for search?

There’s no perfect answer for these questions and chances are you’re not going to get things right the first time. When we realized how wrong we got things the first time, we knew we’d have to build mechanisms into our application that would allow us to reindex our data in such a way that wouldn’t require us to bring everything down. We know that problems will come down the road, so when they do, we have multiple mechanisms in place that will allow us to reindex data with minimal downtime to the end user.

Elasticsearch is a powerful tool with a low barrier to entry. While we viewed the ability for us to get started without much of a learning curve as a blessing, we later found it to be a curse. It’s easy to go down a path that’s doomed to fail and you may find yourself restarting your implementation from scratch. However, taking time to understand the technology and planning to recover from failure will set you up for success. What started as a headache for us has now turned into a massively scalable system that we continue to enhance to power more than just our application search.

We’re hiring. Come work with us!

We’re always looking for passionate and talented engineers to join our growing team.

View open career opportunities

Sean Mandegar">

Sean Mandegar">