At Rewind, we have a requirement to remove data from AWS S3 based on external time criteria. We settled on using S3 batch, with some tooling around it, to remove the data “automagically” using tagging and S3 lifecycle rules. Here’s how we did it.

S3 Batch

S3 batch is an AWS service that can operate on large numbers of objects stored in S3 using background (batch) jobs. At the time of writing, S3 batch can perform the following actions:

- Tagging

- Copies

- ACL updates

- Restores from Glacier

- Invoke a Lambda function

The idea is that you provide S3 batch with a manifest of objects and ask it to perform an operation on every object in the manifest. Batch then runs the operation and reports back with an overall success or failure status, plus a report listing which objects succeeded and which failed.

Conspicuously missing from the list of actions is delete. Batch cannot delete objects in S3. It can invoke a Lambda function that deletes each object, but that adds extra cost and complexity. So, how do we handle deletes? Tagging is the answer.

Batch tagging and lifecycle rules

S3 bucket lifecycle rules can be configured on:

- An entire bucket

- A prefix in a bucket

- A tag/value

The tag filter is exactly what we need when combined with the S3 batch action to add tags. We can now plug this all together to create the final solution, still using Fargate spot containers to distribute the work of creating many S3 batch jobs.

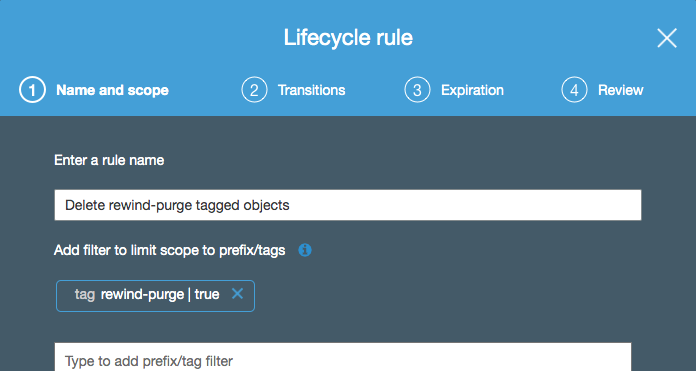

Create a lifecycle rule

The first step is to create a lifecycle rule on your bucket that matches on the tag you plan to use.

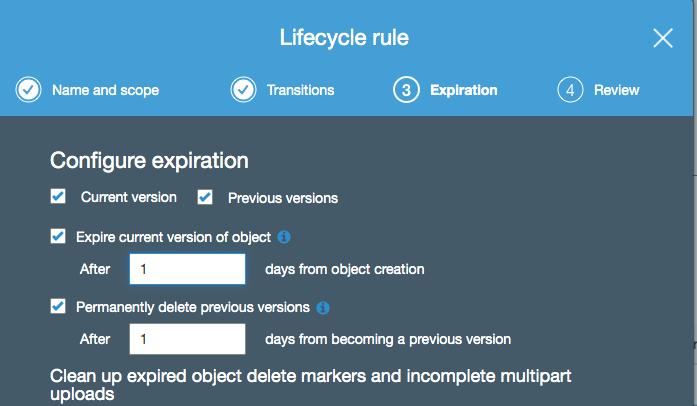

Here, we are saying this lifecycle rule will trigger on any content in the bucket that is tagged with a name of rewind-purge and a value of true.

The actual expiration is configured in the rest of the lifecycle rule. In our case, we can expire after 1 day since the process generating the list of objects to purge has already taken some buffer time into account.

A single rule is all that is required on the S3 bucket since it is simply taking action on objects tagged by batch.
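For readers who prefer the CLI to the console, the rule described above can be sketched as a lifecycle configuration document. This is a minimal sketch, not the exact rule we run: the rule ID `purge-tagged-objects` and the function name are made up for illustration, while the tag name, tag value, and 1-day expiry come from the text above.

```shell
# Print a lifecycle configuration with a single tag-filtered expiration rule.
# The rule ID is a hypothetical name; the tag and expiry follow the article.
lifecycle_rule_json() {
  cat <<'EOF'
{
  "Rules": [
    {
      "ID": "purge-tagged-objects",
      "Status": "Enabled",
      "Filter": { "Tag": { "Key": "rewind-purge", "Value": "true" } },
      "Expiration": { "Days": 1 }
    }
  ]
}
EOF
}

lifecycle_rule_json
```

Saved to a file, the document can be applied with `aws s3api put-bucket-lifecycle-configuration --bucket MYBUCKET --lifecycle-configuration file://lifecycle.json`. That call replaces the bucket's whole lifecycle configuration, so any existing rules need to be included in the same document. On a versioned bucket, noncurrent versions are expired by a separate `NoncurrentVersionExpiration` action, which this sketch leaves out.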

Create S3 batch jobs

Just as in version 1 of the solution, everything is written using bash wrapped around the AWS CLI. Here are the core commands you’ll need in order to submit jobs to batch:

Create a manifest file

The manifest file format is a simple CSV that looks like this:

    Examplebucket,objectkey1,PZ9ibn9D5lP6p298B7S9_ceqx1n5EJ0p
    Examplebucket,objectkey2,YY_ouuAJByNW1LRBfFMfxMge7XQWxMBF
    Examplebucket,objectkey3,jbo9_jhdPEyB4RrmOxWS0kU0EoNrU_oI
    Examplebucket,photos/jpgs/objectkey4,6EqlikJJxLTsHsnbZbSRffn24_eh5Ny4
    Examplebucket,photos/jpgs/newjersey/objectkey5,imHf3FAiRsvBW_EHB8GOu.NHunHO1gVs
    Examplebucket,object%20key%20with%20spaces,9HkPvDaZY5MVbMhn6TMn1YTb5ArQAo3w

There are 2 important notes about the manifest:

- If using versioning, you must specify each version ID

- The object key must be URL encoded
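Both notes can be illustrated with a small helper that builds one manifest line by hand. This is a sketch under assumptions: the function names are made up, and the encoder percent-encodes every byte except letters, digits, `.`, `_`, `~`, `-` and `/`, which may encode more characters than S3 itself would.

```shell
# Percent-encode an object key byte by byte (POSIX sh, relies on od and tr).
urlencode_key() (
  LC_ALL=C; export LC_ALL   # match raw bytes, not locale-dependent characters
  printf '%s' "$1" | od -An -v -tx1 | tr -s ' ' '\n' | while read -r hex; do
    [ -n "$hex" ] || continue
    # Turn the hex byte back into a character via its octal escape.
    char=$(printf "\\$(printf '%03o' "0x$hex")")
    case "$char" in
      [A-Za-z0-9._~/-]) printf '%s' "$char" ;;
      *) printf '%%%s' "$(printf '%s' "$hex" | tr 'a-f' 'A-F')" ;;
    esac
  done
  echo
)

# Print one manifest line: bucket, URL-encoded key, version ID.
manifest_line() {
  printf '%s,%s,%s\n' "$1" "$(urlencode_key "$2")" "$3"
}

manifest_line Examplebucket 'object key with spaces' 9HkPvDaZY5MVbMhn6TMn1YTb5ArQAo3w
```

The encoder spawns a subshell per byte, so it is only meant to show the format; for real listings it is simpler to let S3 do the encoding.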

Handily, the AWS CLI can generate the manifest for a given prefix. The tricky part is that if your prefix contains a lot of objects, you must page the results or the CLI will consume all available memory and exit. This bash function pages the results and produces a manifest compatible with S3 batch:

    create_s3_prefix_manifest_file()
    {
      local bucket=$1
      local prefix=$2
      local max_items=10000
      local tempfile manifest_file next_token

      tempfile=$(mktemp /tmp/objects.XXXXXXXXXXXX)
      manifest_file=$(mktemp /tmp/manifest.XXXXXXXXXXXX)

      # Fetch the first page of object versions
      aws s3api list-object-versions \
        --bucket "${bucket}" \
        --prefix "${prefix}/" \
        --encoding-type url \
        --max-items "${max_items}" \
        --output json > "${tempfile}"

      # Write this data set to the manifest file
      jq -r '.Versions[]? | [.Key, .VersionId] | join(",")' "${tempfile}" | sed -E "s/^/${bucket},/" > "${manifest_file}"

      # Do we have a next token? jq -r prints the literal 'null' if there is no more data
      next_token=$(jq -r '.NextToken' "${tempfile}")

      while [ -n "${next_token}" ] && [ "${next_token}" != "null" ]
      do
        # More data to retrieve
        aws s3api list-object-versions \
          --bucket "${bucket}" \
          --prefix "${prefix}/" \
          --encoding-type url \
          --max-items "${max_items}" \
          --starting-token "${next_token}" \
          --output json > "${tempfile}"

        next_token=$(jq -r '.NextToken' "${tempfile}")

        # Append this set to the manifest
        jq -r '.Versions[]? | [.Key, .VersionId] | join(",")' "${tempfile}" | sed -E "s/^/${bucket},/" >> "${manifest_file}"
      done

      # Check what we have in the manifest file
      if head -1 "${manifest_file}" | grep -q "null"; then
        # No results
        manifest_file="None"
      elif head -1 "${manifest_file}" | grep -q "None"; then
        # Prefix exists but has no objects under it
        manifest_file="None"
      elif [ ! -s "${manifest_file}" ]; then
        # Just no data returned at all
        manifest_file="None"
      fi

      rm -f "${tempfile}"
      echo "${manifest_file}"
    }

One key piece here is using the --encoding-type url option to the CLI to URL-encode the object keys. jq and sed are then used to format the object version list into the manifest format that S3 batch needs.
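If jq is not available, a similar manifest can be assembled from the CLI's text output. The sketch below is an alternative, not what we run: it assumes the listing was produced with `--query 'Versions[].[Key,VersionId]' --output text`, which prints one tab-separated key and version ID per line and the literal `None` when nothing matches. The function name is made up, and the sketch does not address the paging concern described above.

```shell
# Read "key<TAB>version-id" lines on stdin and print S3 batch manifest lines.
# Lines that do not have exactly two fields (such as "None") are skipped.
versions_to_manifest() {
  awk -F '\t' -v bucket="$1" 'NF == 2 { print bucket "," $1 "," $2 }'
}

# Hypothetical usage against a real bucket:
#   aws s3api list-object-versions --bucket "${bucket}" --prefix "${prefix}/" \
#     --encoding-type url --query 'Versions[].[Key,VersionId]' --output text \
#     | versions_to_manifest "${bucket}" > manifest.csv

# Offline demonstration with two sample rows:
printf 'photos/a%%20b.jpg\tv1\nkey2\tv2\n' | versions_to_manifest Examplebucket
```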

Upload the manifest to S3

The manifest file must exist in an S3 bucket. It does not have to be the same bucket as the objects you’ll be manipulating. You will also need the ETag (unique ID) of the manifest file in S3 when creating the batch job.

aws s3 cp /tmp/my-manifest.csv s3://batch-manifests/manifests/my-manifest.csv

Once the file is uploaded, you can obtain the ETag using this CLI command:

etag=$(aws s3api head-object --bucket batch-manifests --key manifests/my-manifest.csv --query 'ETag' --region us-east-1 --output text |tr -d '"')
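The job creation command further down also expects the ARN of the manifest object in a `manifest_arn` variable. A minimal sketch of building it from the bucket and key used above, assuming the standard `aws` partition (other partitions such as `aws-cn` use a different prefix); the helper name is made up:

```shell
# Build the ARN of an S3 object from its bucket and key.
# Assumes the standard "aws" partition.
s3_object_arn() {
  printf 'arn:aws:s3:::%s/%s\n' "$1" "$2"
}

manifest_arn=$(s3_object_arn batch-manifests manifests/my-manifest.csv)
echo "${manifest_arn}"
```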

We’re almost there. Just a couple of other pieces of information to go.

AWS Account ID / Unique ID

S3 batch needs our AWS account ID when creating the job. This can be obtained using the AWS CLI:

account_id=$(aws sts get-caller-identity --query 'Account' --output text --region us-east-1)

Batch also needs a unique client request ID. The uuidgen Linux utility can generate this for us.

cid=$(uuidgen)

IAM Role

Similarly to most AWS services, S3 batch requires a role in order to make changes to objects on your behalf. Here are the required IAM actions to allow S3 batch to tag objects and produce its reports at completion.

    Statement:
      - Effect: Allow
        Action:
          - s3:PutObjectTagging
          - s3:PutObjectVersionTagging
        Resource: "*"
      - Effect: Allow
        Action:
          - s3:GetObject
          - s3:GetObjectVersion
          - s3:GetBucketLocation
        Resource:
          - "arn:aws:s3:::batch-manifests/manifests/*"
      - Effect: Allow
        Action:
          - s3:PutObject
          - s3:GetBucketLocation
        Resource:
          - "arn:aws:s3:::batch-manifests/batch-reports/*"
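The statements above only cover what the role may do. For S3 batch to assume the role at all, the role also needs a trust policy naming the S3 batch service principal. A minimal sketch of that document, wrapped in a helper whose name is made up:

```shell
# Print a trust policy that lets the S3 batch service assume the role.
batch_trust_policy_json() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "batchoperations.s3.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

batch_trust_policy_json
```

Saved to a file, it can be passed when creating the role, for example `aws iam create-role --role-name s3-batch-tagger --assume-role-policy-document file://trust.json`, where the role name is only a placeholder.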

Create the S3 batch job

We can finally create the S3 batch job.

    batch_job_id=$(aws s3control create-job \
      --region us-east-1 \
      --account-id "${account_id}" \
      --operation '{"S3PutObjectTagging": { "TagSet": [{"Key":"rewind-purge", "Value":"true"}] }}' \
      --manifest "{\"Spec\":{\"Format\":\"S3BatchOperations_CSV_20180820\",\"Fields\":[\"Bucket\",\"Key\",\"VersionId\"]},\"Location\":{\"ObjectArn\":\"${manifest_arn}\",\"ETag\":\"${etag}\"}}" \
      --report "{\"Bucket\":\"arn:aws:s3:::batch-manifests\",\"Prefix\":\"batch-reports\",\"Format\":\"Report_CSV_20180820\",\"Enabled\":true,\"ReportScope\":\"AllTasks\"}" \
      --priority 42 \
      --role-arn "${S3_BATCH_ROLE_ARN}" \
      --client-request-token "${cid}" \
      --description "Auto-tagger job for MYBUCKET" \
      --query 'JobId' \
      --output text \
      --confirmation-required)

There are a lot of options in this command so let’s have a look at them one by one:

- account-id: your AWS account ID, which we retrieved using the AWS CLI earlier.

- operation: the action you want S3 batch to perform. For tagging, this is the tag(s) and their values. Note that tags are case sensitive, so the key and value must match those used in the lifecycle rule exactly.

- manifest: where S3 batch can find your manifest file. The full ARN and the ETag of the manifest file are required. For the manifest uploaded above, the ARN is arn:aws:s3:::batch-manifests/manifests/my-manifest.csv.

- report: where to place job completion reports and which reports to generate. These are incredibly helpful in troubleshooting jobs where some objects are successfully operated on but some fail. A separate CSV for success and failure will be generated.

- priority: a relative priority for this job. Higher numbers mean higher priority. In our case, I’m using 42 for all jobs because we all know 42 is the answer to life.

- role-arn: the full ARN of the IAM role whose permissions your S3 batch job will run with.

- client-request-token: a unique ID for this job. We generated one earlier using uuidgen.

- query: standard AWS CLI query parameter, used so we can obtain the job ID to track this batch job.

- confirmation-required: when this is set, S3 batch will create the job but pause, waiting for you to approve it via the console (or CLI). This gives you a chance to inspect the manifest before letting things loose. Once you are comfortable, you can pass --no-confirmation-required instead to have the job run immediately.
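The JSON arguments are the fiddliest part of the command because of the nested quoting. One way to keep them readable is to build each one with a small printf helper. This is a sketch, not part of our tooling: the helper names are made up, and it assumes the values contain no characters that would need JSON escaping.

```shell
# Each helper prints one JSON argument for `aws s3control create-job`.
batch_operation_json() {   # $1 = tag key, $2 = tag value
  printf '{"S3PutObjectTagging":{"TagSet":[{"Key":"%s","Value":"%s"}]}}\n' "$1" "$2"
}

batch_manifest_json() {    # $1 = manifest object ARN, $2 = manifest ETag
  printf '{"Spec":{"Format":"S3BatchOperations_CSV_20180820","Fields":["Bucket","Key","VersionId"]},"Location":{"ObjectArn":"%s","ETag":"%s"}}\n' "$1" "$2"
}

batch_report_json() {      # $1 = report bucket ARN, $2 = report prefix
  printf '{"Bucket":"%s","Prefix":"%s","Format":"Report_CSV_20180820","Enabled":true,"ReportScope":"AllTasks"}\n' "$1" "$2"
}

batch_report_json arn:aws:s3:::batch-manifests batch-reports
```

The helpers would then be used as, for example, `--manifest "$(batch_manifest_json "${manifest_arn}" "${etag}")"`.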

That’s it! S3 batch will then do its thing and add tags to the S3 objects you’ve identified for deletion. The S3 lifecycle rule will follow suit in the background, deleting the objects you’ve tagged.
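If you want a script to wait for the job rather than fire and forget, the job status can be polled with `aws s3control describe-job`. The function below is a sketch under assumptions: its name and exit codes are made up, and it relies on the CLI's default region and credentials.

```shell
# Poll an S3 batch job until it reaches a state that needs attention.
# $1 = account ID, $2 = job ID, $3 = seconds between polls (default 30)
# Returns 0 on Complete, 1 on Failed/Cancelled, 2 if the CLI call fails,
# and 3 if the job is Suspended (waiting for confirmation).
wait_for_batch_job() {
  while :; do
    job_status=$(aws s3control describe-job \
      --account-id "$1" \
      --job-id "$2" \
      --query 'Job.Status' \
      --output text) || return 2
    case "${job_status}" in
      Complete) return 0 ;;
      Failed|Cancelled)
        echo "job $2 ended with status ${job_status}" >&2
        return 1 ;;
      Suspended)
        echo "job $2 is waiting for confirmation" >&2
        return 3 ;;
      *) sleep "${3:-30}" ;;
    esac
  done
}
```

A job created with --confirmation-required sits in the Suspended state until it is approved, which can be done from the CLI with `aws s3control update-job-status --account-id "${account_id}" --job-id "${batch_job_id}" --requested-job-status Ready`.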

Using this strategy along with the Fargate spot army we previously wrote about allows for easy management of millions or billions of S3 objects with minimal overhead.

Epilogue — AWS Costs

After writing and posting this, it was pointed out that this is not the most cost-effective solution and can get very expensive depending on the number of objects. Let’s break down the costs assuming 1 million objects in a single prefix:

- Creating the manifest. This is done in batches of 10,000 per call to list-object-versions. 100 list calls is $0.01

- S3 tags. S3 tags are $0.01 per 10,000 tags per month. In our case, we’re keeping the tag for 1 day but let’s assume it stays for a month. 1M tags is $1/month

- S3 batch. Batch is $0.25 per job plus $1 per million operations. $1.25

- S3 Puts. Adding a tag is a Put operation on an S3 object. 1M Put operations is $5

- Lifecycle expiry. Lifecycle jobs that only expire data are free.

Assuming this is all done in a single S3 batch job, the total cost to tag 1M objects using S3 batch is then $7.26 ($6.26 if the tagged objects are removed within a day and the tag storage cost is negligible).
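To redo the arithmetic for other object counts, the line items can be put into a small function. This is a sketch that hard-codes the rates quoted in the list above (they are not fetched from AWS and may change); the function name is made up. Note that at $0.01 per 10,000 tags, 1M tags works out to 100 x $0.01 = $1 per month.

```shell
# Estimate the cost in USD of tagging objects with a single S3 batch job.
# $1 = number of objects, $2 = months the tags are kept (default 1)
tagging_cost() {
  awk -v n="$1" -v months="${2:-1}" 'BEGIN {
    list  = 0.01 * n / 1000000          # manifest listing, scaled from the figure above
    tags  = 0.01 * n / 10000 * months   # $0.01 per 10,000 tags per month
    batch = 0.25 + 1.00 * n / 1000000   # per job plus per million operations
    puts  = 5.00 * n / 1000000          # each tag write is a PUT
    printf "%.2f\n", list + tags + batch + puts
  }'
}

tagging_cost 1000000      # tags kept for a month
tagging_cost 1000000 0    # tag storage ignored
```

With these rates the two calls print 7.26 and 6.26 respectively.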

Dave North
